Artificial Intelligence

Artificial Intelligence to meet your challenges and go further

Discover Coexya's historical approach to Artificial Intelligence (AI) and Data Sciences.

Big data, cloud and digital transformation, the foundations of AI

Improving processes and making them more reliable, more efficient, better adapted and more personalised is at the heart of our approach to providing increasingly relevant responses to your challenges.

Digital transformation, Big Data, open data, more powerful servers and the cloud are all advances that have facilitated the deployment of platforms and solutions, each more innovative than the last, without the need for substantial investment.

These advances have fuelled a meteoric rise in new technologies, with Artificial Intelligence and Data Sciences being the most emblematic of the last 10 years. Like all new technologies, they are having an impact on our businesses and will open up opportunities that are as yet unknown.

Coexya, strong expertise in data processing

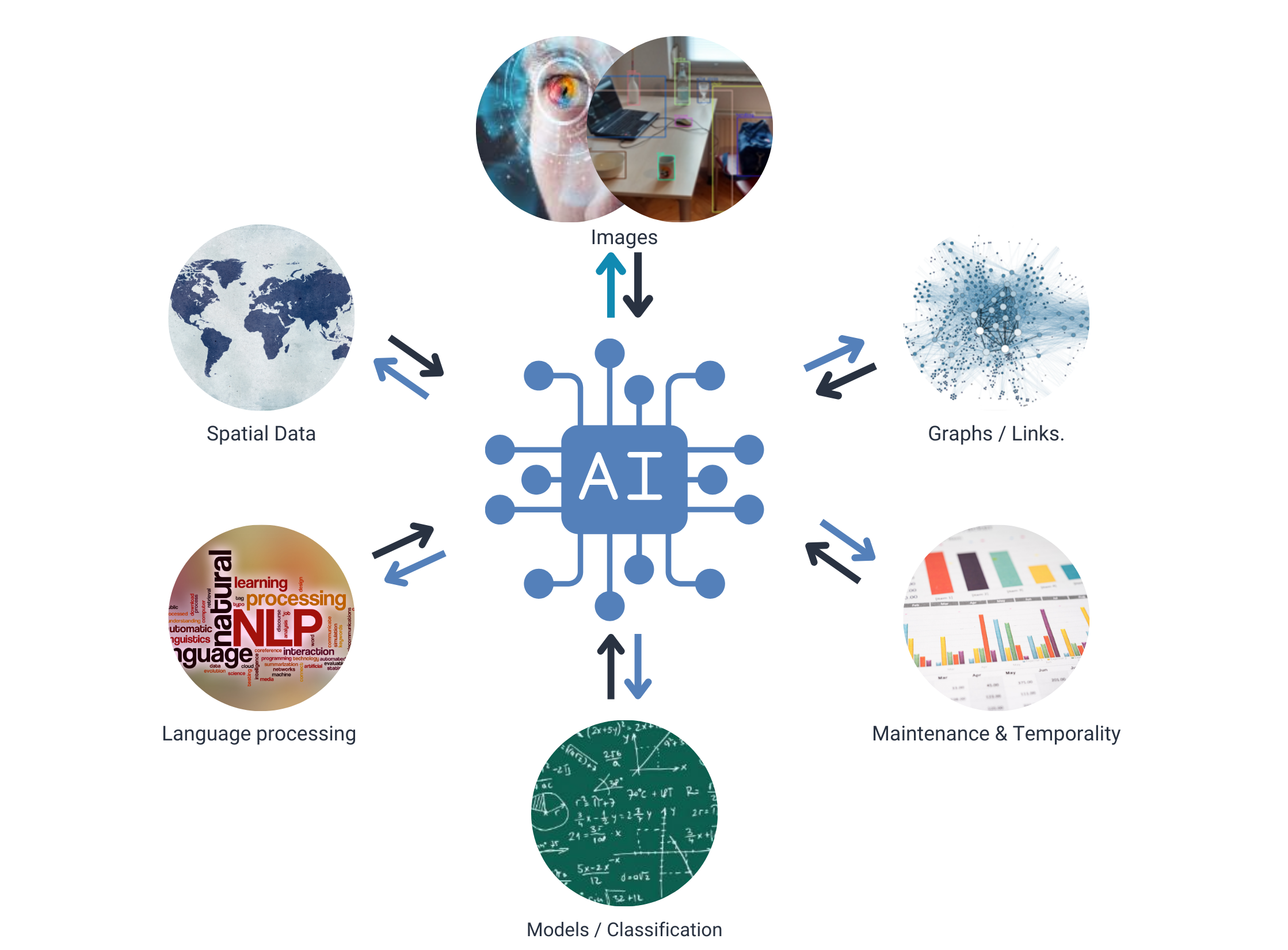

And where does Coexya fit in? With over 20 years’ experience, Coexya is one of the recognised specialists in data processing, both structured and unstructured (text, 2D and 3D images, sound, video), from capture to enhancement via the design and implementation of solutions tailored to different use cases.

Artificial Intelligence at the heart of our business solutions

From 2000 to 2012, Coexya carried out a large number of projects based on symbolic AI.

Our significant achievements in natural language processing (NLP) technologies:

- Industrial Property: measurement of similarity between images and automatic classification

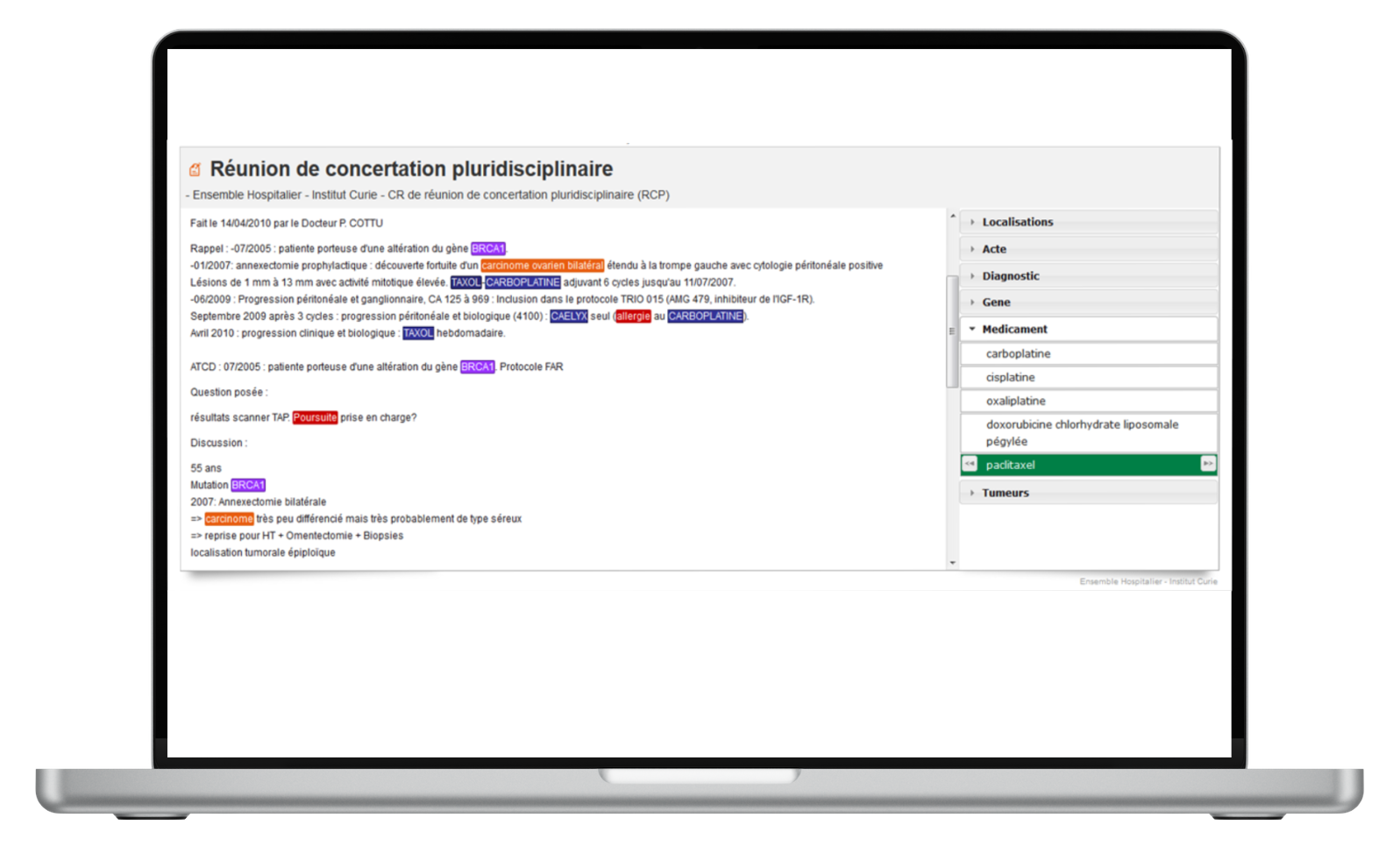

- Health: automatic interpretation of medical reports for patient management in emergency situations (LERUDI)

- Health: automatic interpretation of medical procedure reports to create oncology cohorts (CONSORE)

- Legislative: helping to consolidate legislation in France by analysing the Official Journal and looking for amending measures

- Legislative: anonymisation of case law texts

Symbolic AI: how machines imitate human thought

Symbolic AI: Component that enables reasoning based on formal rules (expert system)

Our view: Easy to explain because it is based on formal rules. On the other hand, it requires upstream codification work by experts in the field.
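To make the idea of reasoning from formal rules concrete, here is a minimal sketch of a forward-chaining rule engine in Python. The rule names and facts are hypothetical, chosen only for illustration; they are not taken from any Coexya system and are not medical guidance.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule reads "if all conditions hold, then the conclusion holds".
Rule = Tuple[FrozenSet[str], str]

# Hypothetical rules, written by hand as a domain expert would.
RULES: List[Rule] = [
    (frozenset({"open_wound", "on_anticoagulants"}), "bleeding_risk"),
    (frozenset({"bleeding_risk"}), "alert_physician"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"open_wound", "on_anticoagulants"}, RULES)
print(sorted(derived))
# ['alert_physician', 'bleeding_risk', 'on_anticoagulants', 'open_wound']
```

Every conclusion can be traced back to the rule that produced it, which is why such systems are easy to explain. The cost is visible too: each rule has to be written by someone who knows the field.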

From 2012 onwards, connectionist AI, driven by results on image analysis that were as unexpected as they were impressive, changed the general approach, and our methods adapted accordingly.

Our achievements:

- Investigation: analysis of multimedia and multilingual data (Text, Sound, Image, Video), facial recognition, translation…

- Health: overhaul of the Consore platform

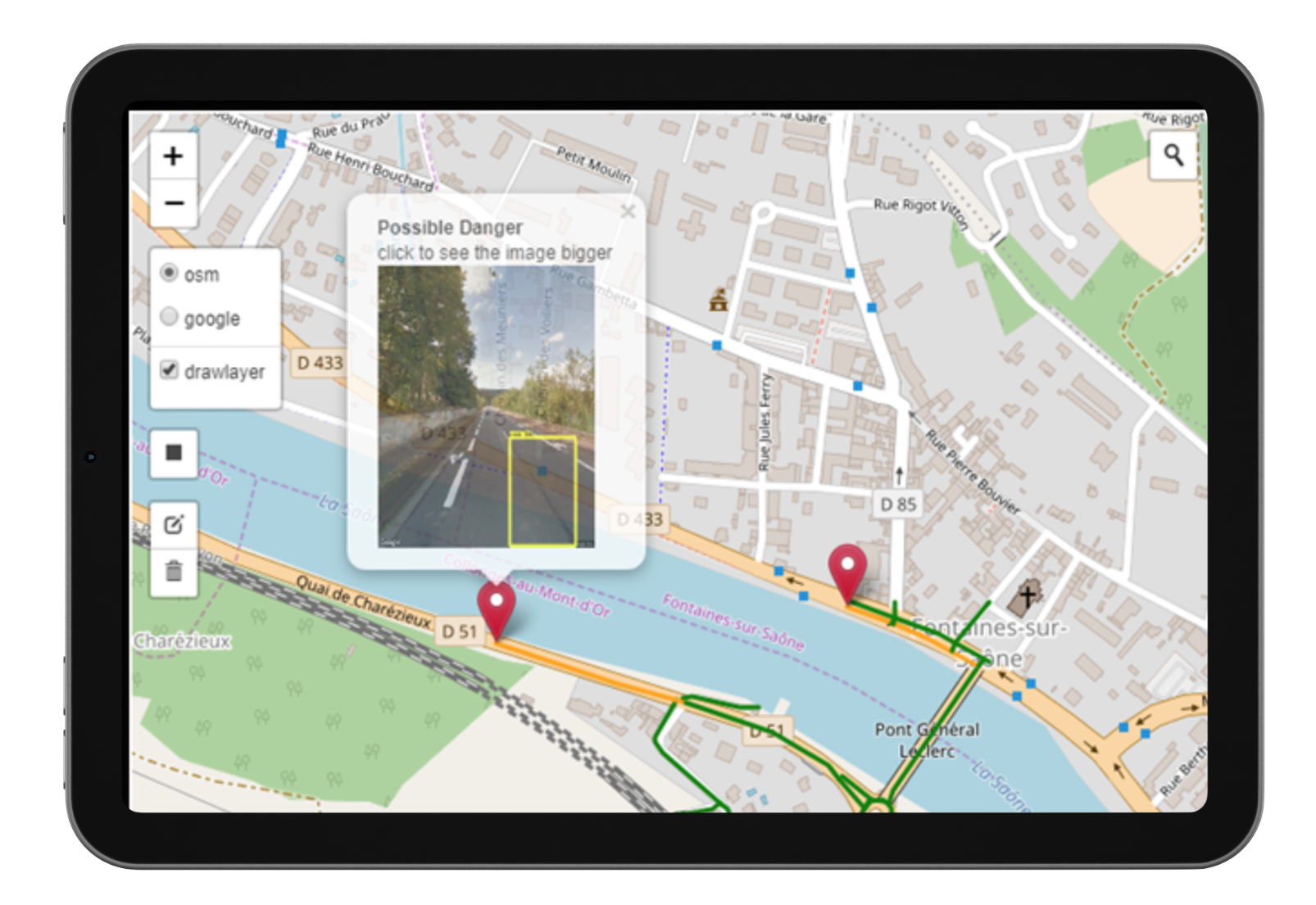

- Road infrastructure: bot for image-based detection of road defects

- Industrial property: overhaul of similarity search and classification (learning based on enriched Symbolic AI results)

- Digital twin: 3D reconstruction of underground stations and automatic recognition of the equipment present

- Nuclear: instantaneous translation for training in nuclear waste reprocessing

- Access control: facial recognition and validation of identity documents

Connectionist AI: how machines learn like the human brain

Connectionist AI: Component combining machine learning and deep learning approaches. Rules are no longer coded, they are learned.

Our view: We see no limit to the highly versatile approach of these architectures and the ability to specialise existing AI.

The downside is that there is no magic yet: the majority of achievements are based on supervised learning and require a dataset annotated by experts, which is not always available and may be poorly annotated or even biased.
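As an illustration of rules being learned rather than coded, the sketch below trains a single artificial neuron (a perceptron) on a tiny annotated dataset. This is a deliberately simple stand-in for the deep networks discussed here; the data, function names and parameters are assumptions made for the example.

```python
from typing import List, Tuple

# Each example pairs input features with a label supplied by an annotator.
Example = Tuple[List[float], int]


def predict(weights: List[float], bias: float, features: List[float]) -> int:
    """Return 1 if the weighted sum of the features is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(
    data: List[Example], epochs: int = 20, lr: float = 1.0
) -> Tuple[List[float], float]:
    """Adjust weights from labelled examples; no rule is written by hand."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Toy annotated dataset: the label is 1 only when both features are present.
DATA: List[Example] = [
    ([0.0, 0.0], 0),
    ([0.0, 1.0], 0),
    ([1.0, 0.0], 0),
    ([1.0, 1.0], 1),
]

weights, bias = train_perceptron(DATA)
print([predict(weights, bias, features) for features, _ in DATA])
# [0, 0, 0, 1]
```

The model recovers the intended behaviour purely from the labels. If the labels were wrong or biased, it would learn that instead, which is the dependency on annotated data described above.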

In 2022, generative AI exploded into the mainstream via OpenAI and its ChatGPT platform, built on a Large Language Model (LLM). These LLMs were made possible by the Transformer architecture, introduced in 2017 in a revolutionary article written by a Google team, ‘Attention Is All You Need’.

Coexya uses, prototypes and monitors Generative AI.

- Coexya software factory: integration of GitHub Copilot and StarCoder into development environments: pair programming, code analysis assistance, bug finding, optimisation, etc.

- Search: prototyping of response generation based on private documentary corpora (fine-tuned LLM)

- Analytical: prototype for assistance in resolving investigation cases

- Analytical: prototype to help analyse quantitative results

Generative AI: Component that generates text, images, sound, video and presentations based on prompts.

Our verdict: After AI’s ability to understand content, the generative dimension naturally comes into its own. It’s an exocortex that needs to be tamed to make it a relevant and versatile assistant in a number of generative and analytical tasks, with varying degrees of creativity. If you don’t tame it, you’ll end up subjected to it rather than served by it!

Are you ready for generative AI? Test your maturity with our quiz!

Find out where your company stands on its journey towards artificial intelligence using our interactive quiz, and receive a personalised diagnosis to help you move forward.

Discover Microsoft 365 Copilot with Coexya

At Coexya, we help you get to grips with Microsoft 365 Copilot, a revolutionary generative artificial intelligence solution.

What we offer:

- Discovery: Explore Copilot’s functionalities and identify the opportunities adapted to your organisation.

- Preparation: Set up a secure environment and prepare your data to maximise benefits.

- Facilitation: Deploy adoption programmes and customise Copilot to meet your specific needs.

Why invest in Copilot?

Because you want to:

- Increase productivity and reduce time spent on repetitive tasks.

- Encourage creativity with innovative tools.

- Guarantee secure and responsible AI.

Jean-Louis, Coexya's Technical Director, talks to us about Artificial Intelligence

Discover the history of AI

How was AI born?

AI, a vast subject. It all started by rebranding old systems known as expert systems, which were initially designed to replace experts by encoding all their knowledge. At that time, researchers dreamed of capturing everything they could from experts, replicating it, and using that knowledge to infer all kinds of systems.

These systems largely failed because they faced rejection, especially from the experts themselves, notably in medicine. Replacing decision-making with a system was not acceptable at all. It took several years for people to understand that these tools were not meant to replace experts but to assist them.

From that point on, expert systems were replaced by a term that was a bit more marketing-oriented: Artificial Intelligence. The term AI resurfaced and gained traction with developments in image processing and analysis using deep neural networks, particularly convolutional networks, to analyse, understand, and segment image content.

The name Artificial Intelligence was then reused in various contexts, encompassing algorithms, logistic regressions, and probabilistic models that allow decision-making. Essentially, we have a set of expected results, and we learn how those results are obtained.

This approach is entirely different from what was done previously. It no longer relies on rules; we rely less on experts and more on data to enable learning. Of course, there are algorithms that don’t require data, but they are not the ones most commonly used today.

What are the different types of AI?

In AI, once the term was established, several approaches emerged. We can explain this retrospectively. Starting from the mid-2010s, particularly around 2015, we saw the rise of image processing through deep neural networks, now referred to as connectionist AI.

Looking back, what were we doing before that? Just prior to this, we relied on rule-based systems, now called symbolic AI, for comparison. These systems used rules like "if A, then B" and so on. They were easy to explain but very challenging to implement because domain expertise was required to define the rules effectively.

By 2015, particularly in the field of image processing and analysis, deep neural networks appeared, producing simply astonishing results. In 2015, facial recognition algorithms surpassed human performance.

This marked a level of achievement never reached with symbolic AI. Thus, connectionist AI was born, relying on deep neural networks and data-driven learning. This shift eliminated the absolute need for a domain expert to define rules but introduced the critical need for representative data relevant to the elements being processed.

The movement accelerated further in 2017, following the publication of a scientific paper by Google’s research teams. They demonstrated the capacity for machines to learn from large datasets. This led to the development of what we now call Large Language Models (LLMs), which underpin what we refer to today as generative AI.

What is Coexya's approach to AI?

Since the launch of ChatGPT by OpenAI, the entire world has realised that understanding is important, but that generating is also a real complement in the analysis of language and discourse in a broad sense. We are beginning to have genuine interactions with highly relevant responses in different languages and on various topics. Integrating this into our development models provides significant added value.

Today, our added value lies in blending all our domain expertise at Coexya—whether it’s geographical data, healthcare data, or legal data—with our technical skills in integrating these types of models. This combination ensures our contribution to the continued development of AI at Coexya, a journey that began back in 2009.

What are Coexya's major AI-based projects?

Our first project using these technologies dates back to 2009. It was carried out for the Ministry of Health and focused on emergency patient care and the tools that could assist doctors in making decisions: for example, identifying whether a patient has a certain medical history or is on anticoagulants despite having an open wound. All this information gives doctors critical elements to make faster decisions. We worked on this significant project as part of the patient medical record initiative.

We also developed a comprehensive platform for industrial property analysis, specifically for shape recognition in trademarks and patents. This included recognising logos, classifying them, and measuring similarity between logos to prevent unfair competition.

In the healthcare field, particularly in oncology, we are proud of our work on the CONSORE project. This initiative focuses on analysing oncological pathologies and identifying key elements to help clinical researchers detect patient cohorts through the analysis of unstructured data.

Today, we are at a stage where, beyond understanding, we are generating, which is the foundation of generative AI, enabling us to draw conclusions. Our projects now are diverse, primarily in the form of proof-of-concept initiatives. These involve managing large volumes of data and integrating this type of technology with connectionist AI to enhance the overall system.